Solving IT puzzles

Wednesday, June 29, 2016

Networking cheatsheet

Yikes, these days I have to deal with a lot of networking challenges. CIDRs, class A/B/C subnets and VNets, network security groups, route-based and policy-based VPNs, and IKE protocols are all terms I need to deal with every day.

I came across this cheatsheet that is especially handy for CIDR ranges: https://oav.net/mirrors/cidr.html.
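For a quick check without the cheatsheet, the same numbers can be computed with Python's standard `ipaddress` module. This is only a sketch: the helper name `describe` and the example blocks are arbitrary picks for illustration, not ranges taken from a real setup.

```python
import ipaddress


def describe(cidr: str) -> dict:
    """Summarise an IPv4 CIDR block: netmask, first/last address, size."""
    # strict=True rejects blocks whose host bits are set, e.g. 10.1.2.5/27
    net = ipaddress.ip_network(cidr, strict=True)
    return {
        "netmask": str(net.netmask),
        "first": str(net.network_address),
        "last": str(net.broadcast_address),
        "addresses": net.num_addresses,
    }


# Hypothetical example blocks, from large to small
for block in ("10.0.0.0/8", "172.16.0.0/12", "192.168.1.0/24", "10.1.2.0/27"):
    print(block, describe(block))
```

Each extra bit in the prefix halves the block, which is exactly what the cheatsheet tabulates: a /24 holds 256 addresses, a /27 holds 32.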

Monday, June 27, 2016

DNX on Ubuntu - in an ARM template - on the Microsoft Azure website

Win! My ARM template that spins up a Linux VM and installs DNX plus a 'hello world' console application has been pulled into the official repository and is now listed on the Azure website!

As you may know, these templates come from the GitHub repository 'Azure Quickstart Templates': https://github.com/Azure/azure-quickstart-templates/tree/master/dnx-on-ubuntu

Tuesday, May 17, 2016

.NET Console application on Linux - Hello World !

I'm working on a demo to show .NET (C#) code on Linux. As you all know, the new cross-platform .NET Execution Environment (DNX) also runs on Linux, so this should be a breeze. So - let's see what it takes to make .NET run on your Linux machine.

First of all - you need to install the .NET version manager (DNVM) on your machine, which is really simple to do:

I've put up a script on GitHub that will do all of this for you: https://github.com/jochenvw/arm_sandbox/blob/master/ubuntu-aspnetcore/ubuntu_aspnetcore.sh

Also, in this script I create a main.cs file with the 'Hello World' console command.

Indeed - this is C# in Nano on Ubuntu. Pretty cool huh? I've found Nano syntax highlighting for C# - which is probably very similar to the Java highlighting.

Anyway - after that - you just verify that you've got DNX installed, do a package restore with the DNX utility tool (dnu), do a 'dnu build' and then a 'dnx run' to run your application:
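The sequence above could be wrapped in a small driver along these lines. This is a sketch, not the script linked earlier: the function name `run_steps` and the `dry_run` switch are made up for illustration, and only the three commands themselves come from the steps described here.

```python
import shutil
import subprocess

# The three steps described above, in order.
STEPS = [
    ["dnu", "restore"],  # package restore
    ["dnu", "build"],    # compile the project
    ["dnx", "run"],      # run the console application
]


def run_steps(project_dir=".", dry_run=False):
    """Run restore, build and run in project_dir; return the commands used."""
    # Verify that dnx is on the PATH before doing anything for real.
    if not dry_run and shutil.which("dnx") is None:
        raise RuntimeError("dnx not found on PATH; install a runtime with DNVM first")
    for cmd in STEPS:
        if dry_run:
            print("would run:", " ".join(cmd))
        else:
            # check=True stops at the first failing step
            subprocess.run(cmd, cwd=project_dir, check=True)
    return STEPS


# Dry run only, so this is safe on a machine without DNX.
run_steps(dry_run=True)
```

Stopping at the first failing step matters for an unattended install, where nobody is watching the output.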

I'm making this setup script work unattended with the Azure ARM CustomScript extension so that you can one-click-deploy a Linux box with DNX installed and this application up and running. Then I'll make a pull request for the previously mentioned quickstart repository so it's even easier to get started!
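To give an idea of what such an extension looks like inside a template, here is a rough sketch that builds the resource as a Python dict and prints it as JSON. Several things are assumptions rather than facts from this post: the raw script URL is derived from the GitHub link above, the extension name `installdnx` and the VM name `myvm` are hypothetical, and the publisher, type, handler version and API version are the values commonly used for Linux VMs at the time, so check them against the current Azure documentation.

```python
import json

# Assumed raw form of the GitHub link to the setup script
SCRIPT_URL = ("https://raw.githubusercontent.com/jochenvw/arm_sandbox/"
              "master/ubuntu-aspnetcore/ubuntu_aspnetcore.sh")


def custom_script_extension(vm_name, script_url):
    """Build a CustomScript extension resource for an ARM template (sketch)."""
    script_file = script_url.rsplit("/", 1)[-1]  # file name as downloaded on the VM
    return {
        "type": "Microsoft.Compute/virtualMachines/extensions",
        "name": f"{vm_name}/installdnx",           # hypothetical extension name
        "apiVersion": "2015-06-15",                 # assumed API version
        "location": "[resourceGroup().location]",
        "dependsOn": [f"Microsoft.Compute/virtualMachines/{vm_name}"],
        "properties": {
            "publisher": "Microsoft.OSTCExtensions",  # assumed Linux publisher
            "type": "CustomScriptForLinux",
            "typeHandlerVersion": "1.4",             # assumed handler version
            "settings": {
                "fileUris": [script_url],            # downloaded onto the VM
                "commandToExecute": f"sh {script_file}",
            },
        },
    }


print(json.dumps(custom_script_extension("myvm", SCRIPT_URL), indent=2))
```

The extension downloads everything in `fileUris` and then runs `commandToExecute`, which is why the script has to work without any prompts.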

Sunday, May 15, 2016

Quick way to get started with Azure ARM templates

For those who don't fully grasp the power of 'infrastructure as code', there's this awesome GitHub repo out there that allows you to one-click-deploy ARM templates for a whole range of setups. Check it out here: https://github.com/Azure/azure-quickstart-templates.
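The one-click deploy is, as far as I can tell, just a portal link with the URL-encoded address of the raw template appended. A sketch of building such a link follows; the helper name `deploy_url`, the `azuredeploy.json` file name and the flat `master/<folder>` layout are assumptions about the repo's conventions, and the folder name is only an example.

```python
from urllib.parse import quote

# Portal entry point for deploying a template from a URI
PORTAL_PREFIX = "https://portal.azure.com/#create/Microsoft.Template/uri/"


def deploy_url(folder, repo="Azure/azure-quickstart-templates", branch="master"):
    """Build a 'Deploy to Azure' link for a template folder (sketch)."""
    # Assumes the template file is named azuredeploy.json inside the folder.
    raw = f"https://raw.githubusercontent.com/{repo}/{branch}/{folder}/azuredeploy.json"
    # safe="" also encodes ':' and '/', so the whole URL travels as one segment.
    return PORTAL_PREFIX + quote(raw, safe="")


print(deploy_url("dnx-on-ubuntu"))
```

Pointing `repo` and `branch` at your own fork should let you test a template the same way before sending it upstream.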

Go inside one of the folders to see the JSON sources and possible scripts:

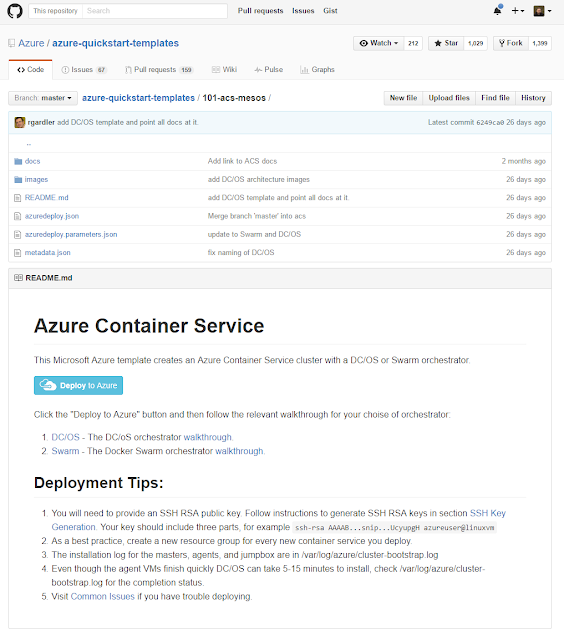
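The JSON in those folders follows a fixed top-level shape. Below is a sketch of the empty skeleton, written as a Python dict and printed as JSON. The function name `empty_template` is made up, and the schema URL is the 2015-01-01 deployment template schema as I remember it, so treat that as an assumption.

```python
import json


def empty_template():
    """Return the top-level skeleton of an ARM deployment template (sketch)."""
    return {
        # Assumed schema URL for resource group deployments
        "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {},   # values supplied at deploy time (VM name, password, ...)
        "variables": {},    # values computed inside the template
        "resources": [],    # the VMs, networks, extensions, ... to create
        "outputs": {},      # values returned after deployment
    }


print(json.dumps(empty_template(), indent=2))
```

Everything interesting lives in `resources`; the other sections mostly exist to keep that list free of hard-coded values.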

For instance - want to spin up a Linux box on Azure with Mesos on it? No problem:

By the way - these are the templates that drive the website https://azure.microsoft.com/en-us/documentation/templates/

Got any of your own templates you want to contribute? That too is no problem - just fork and send a pull request.

Monday, April 11, 2016

Writing high performance C# code with Bart de Smet

Awesome new video from Bart de Smet, going in-depth on high performance C# code. Very interesting stuff.

WRITING HIGHLY PERFORMANT MANAGED CODE from Øredev Conference on Vimeo.

Thursday, January 28, 2016

Thursday, January 21, 2016

Git Kraken: new cool Git client for all platforms

Go get your beta invite here: http://www.gitkraken.com/. That's what I did, and Axosoft sent me a download link and access code to check out their new Git client.

It worked really well, right out of the box. What sets this client apart from the others is the sexy-looking branch graph of the repository. This is very useful for me, since it lets me see which developer is working on what, and on which branch.
